Virtual Peace (http://virtualpeace.org) is alive as of last evening.

For the last gosh-don’t-recall-how-many-months I’ve been working as a Project Collaborator for a project envisioned by the other half (more than half) of the Jenkins Chair here at Duke, Tim Lenoir. For those of you who don’t know Tim, he’s been a leading historian of science for decades now, helping found the History and Philosophy of Science program at Stanford. Tim is notable in part for changing areas of expertise multiple times over his career, and most recently he’s shifted into new media studies. This is the shift that brought him here to Duke, and I can’t say enough how incredible an opportunity it is to work for him. We seem to serve a pivotal function for Duke as people who bring together innovation with interdisciplinarity.

What does that mean? Well, like the things we study, there are no easy simple narratives to cover it. But I can speak through examples. And the Virtual Peace Project is one such example.

Tim, in his latest intellectual foray, has developed an uncanny and unparalleled understanding of the role of simulation in society. He has studied the path, no, the wide swath of simulation in the history of personal computing, and he developed a course teaching contemporary video game criticism in relation to the historical context of simulation development.

It’s not enough to just attempt to study these things in some antiquated objective sense, however. You’ve got to get your hands on these things, do these things, make these things, get some context. And the Virtual Peace project is exactly that. A way for us to understand and a way for us to actually do something, something really fantastic.

The Virtual Peace project is an initiative funded by the MacArthur Foundation and HASTAC through their DML grant program. Tim’s vision was to appropriate the first-person shooter (FPS) interface for immersive collaborative learning. In particular, Virtual Peace simulates an environment in which multiple agencies coordinate and negotiate relief efforts for the aftermath of Hurricane Mitch in Honduras and Nicaragua. The simulation, built on the Unreal game engine in collaboration with Virtual Heroes, allows for 16 people to play different roles as representatives of various agencies all trying to maximize the collective outcome of the relief effort. It’s sort of like Second Life crossed with America’s Army, everyone armed not with guns but with private agendas and a common goal of humanitarian relief. The simulation is designed to take about an hour, perfect for classroom use. And with review components instructors have detailed means for evaluating the efforts and performance of each player.

I can’t say enough how cool this thing is. Each player has a set of gestures he or she may deploy in front of another player. The simulation has some new gaming innovations, including proximity-based sound attenuation and full-screen, full-session multi-POV video capture. And the instructor can choose from a palette of “curveballs” to make the simulation run interesting. Those changes to the scenario are communicated to each player through a PDA his or her avatar has. I was pushing for a heads-up display but that’s not quite realistic yet I guess. 😉

The project pairs the simulation with a course-oriented website. While a significant amount of web content is visible to the public, most of the website is intended as a sort of simulation-preparation and role-assignment course site. We custom-built an authentication and authorization package that is simple, lightweight, and user-friendly. It lets instructors assign each student a role in the simulation, track the assignments, and distribute hidden documents to people with specific roles. After the simulation run, it opens everything up to everyone, including an after-action review.
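For the curious, here is a rough sketch of how role-based document visibility along those lines might work. This is not the actual Virtual Peace code. The class names (`Document`, `Course`), the `run_completed` flag, and the example roles are all made up for illustration, and it assumes a document with no listed roles is open to every enrolled student.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    # Hypothetical convention: an empty set means every enrolled student may view it.
    visible_to: frozenset = frozenset()


@dataclass
class Course:
    # Maps student name -> assigned simulation role (names are illustrative only).
    assignments: dict = field(default_factory=dict)
    run_completed: bool = False

    def assign(self, student: str, role: str) -> None:
        """Record (or overwrite) the role an instructor gives a student."""
        self.assignments[student] = role

    def can_view(self, student: str, doc: Document) -> bool:
        """Decide whether a student may open a document."""
        role = self.assignments.get(student)
        if role is None:
            # Not enrolled in this course: no access to course documents.
            return False
        if self.run_completed:
            # After the simulation run, everyone sees everything.
            return True
        # Before the run, restricted documents go only to the listed roles.
        return not doc.visible_to or role in doc.visible_to


course = Course()
course.assign("alice", "UN coordinator")
course.assign("bob", "NGO delegate")
briefing = Document("Confidential briefing", frozenset({"UN coordinator"}))

before_run = (course.can_view("alice", briefing), course.can_view("bob", briefing))
course.run_completed = True
after_run = course.can_view("bob", briefing)
```

Keeping the "everything opens up after the run" rule as a single flag on the course means the per-document role lists never have to be edited once the simulation is over.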

Last evening, Wednesday October 08, 2008, the Virtual Peace game simulation enjoyed its first live classroom run at the new Link facility in Perkins Library at Duke University. A class of Rotary Fellows affiliated with the Duke-UNC Rotary Center was the first to play the simulation, and there was much excitement in the air.

Next up:

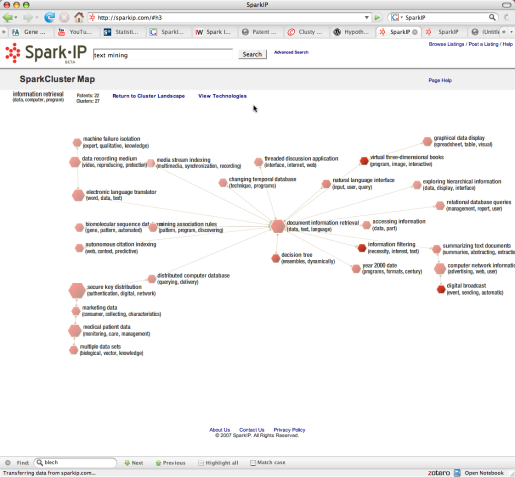

I never miss a beat here it seems, for now I am already onto my next project, something that has been my main project since starting here: reading research and patent corpora mediated through text mining methods. Yes that’s right, in an age where we struggle to get people to read at all (imagine what it’s like to be a poet in 2008) we’re moving forward with a new form of reading: reading everything at once, reading across the dimensions of text. I bet you’re wondering what I mean. Well, I just can’t tell you what I mean, at least, not yet.

At the end of October I’ll be presenting with Tim in Berlin for the “Writing Genomics: Historiographical Challenges for New Historical Developments” workshop at the Max Planck Institute for the History of Science. We’ll be presenting on some results related to our work with the Center for Nanotechnology in Society at UCSB. Basically we’ll be showing some of our methods for analyzing large document collections (scientific research literature, patents) as applied to the areas of bio/geno/nano/pharma both in China and the US. We’ll demonstrate two main areas of interest: the semiotic maps of idea flows over time that I’ve developed in working with Tim and Vincent Dorie, and the spike in the Chinese nano scientific literature at the intersection of bio/geno/nano/pharma. This will be perfect for a historiography workshop. The stated purpose of the workshop:

Although a growing corpus of case-studies focusing on different aspects of genomics is now available, the historical narratives continue to be dominated by the “actors” perspective or, in studies of science policy and socio-economical analysis, by stories lacking the fine-grained empirical content demanded by contemporary standards in the history of science.[…] Today, we are at the point in which having comprehensive narratives of the origin and development of this field would be not only possible, but very useful. For scholars in the humanities, this situation is simultaneously a source of difficulties and an opportunity to find new ways of approaching, in an empirically sound manner, the complexities and subtleties of this field.

I can’t express enough how excited I am about this. The end of easy narratives and the opportunity for intradisciplinary work (nod to Oury and Guattari) is just fantastic. So, to be working on two innovations, platforms of innovation really, in just one week. I told you my job here was pretty cool. Busy, hectic, breakneck, but also creative and multimodal.
